ffmpeg for clipping and GLMs

Just a quick summary of what I’ve been working on recently.

ffmpeg for clipping

For nearly the past year, but effectively only since March or so, I’ve been actively maintaining yd_, a YouTube channel where I translate and upload videos of various Japanese Virtual YouTubers’ (VTubers) streams (Figure 1).

My YouTube channel's page as of November 15, 2022.

Introduction to “clipping”

Clipping, the process of cutting down a video and uploading it on YouTube, essentially requires six steps:

1. Finding a clip

2. Downloading the clip

3. Video editing, e.g. splicing, adding transitions, etc.

4. Translation

5. Creating a thumbnail

6. Uploading

Below, I cover steps 1-5 and introduce some of the tools I’ve worked on to help make this process easier.

Finding clips.

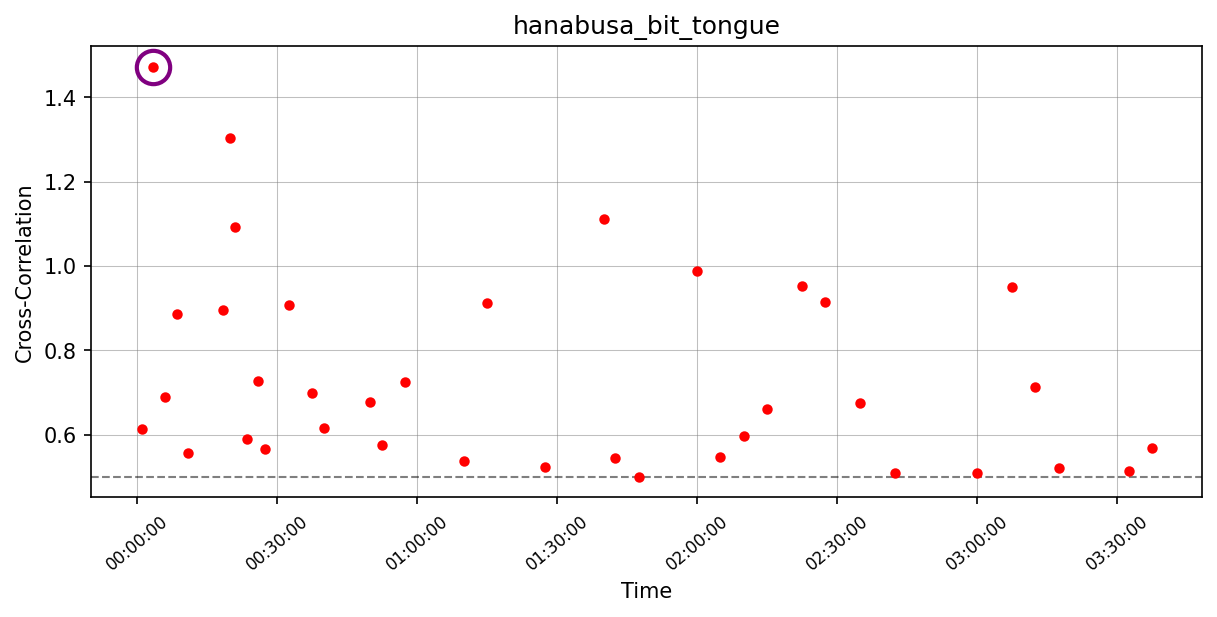

I find most clips by watching Japanese clips, and a smaller number by watching streams directly. However, since streams can be several hours long (or days, even), it can be difficult to find where in a five-hour stream a 10-minute clip originates. To address this, I developed YouTubeClipFinder, a very simple tool based on scipy’s scipy.signal.correlate to find where (in time) a clip originates from a longer stream. It first constructs a number of ‘bins’ (e.g. 0-5 minutes, 5-10 minutes, etc.), downloads the audio in each bin from the original stream, and computes the cross-correlation between the bin and the query clip (Figure 2). The bin with the highest correlation peak is the most likely source of the clip.

However, nowadays, I prefer simply searching the transcript and chat replay of a stream, as it’s usually much faster and easier. Consequently, I haven’t touched any of the code above in months. Nonetheless, there are cases when transcripts and chat replay are not available (either in the query clip or the stream itself), which is when this software may still prove useful.
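To make the binning-and-correlation idea concrete, here is a minimal sketch. It is not the actual YouTubeClipFinder code: the function name `best_bin`, its parameters, and the assumption that both audio tracks are already loaded as mono NumPy arrays at the same sample rate are all mine, and the downloading step is left out entirely.

```python
import numpy as np
from scipy.signal import correlate


def best_bin(stream, clip, sr, bin_seconds):
    """Return (bin_index, start_seconds) of the best match for `clip` in `stream`.

    Hypothetical helper: `stream` and `clip` are 1-D float arrays sampled at `sr` Hz.
    """
    bin_len = int(bin_seconds * sr)
    clip = clip - clip.mean()
    best_score, best_index, best_start = -np.inf, 0, 0.0
    for i, start in enumerate(range(0, len(stream), bin_len)):
        # Extend each bin by the clip length so a clip straddling two bins is still found.
        chunk = stream[start:start + bin_len + len(clip)]
        if len(chunk) < len(clip):
            break
        chunk = chunk - chunk.mean()
        # "valid" mode: entry k is the score for the clip aligned at chunk[k:k + len(clip)].
        xcorr = correlate(chunk, clip, mode="valid", method="fft")
        peak = int(np.argmax(xcorr))
        # Raw (unnormalized) correlation; loud passages can bias it on real audio.
        if xcorr[peak] > best_score:
            best_score, best_index, best_start = float(xcorr[peak]), i, (start + peak) / sr
    return best_index, best_start
```

On real audio, a normalized correlation or a downsampled signal would probably behave better, but the structure (loop over bins, correlate, keep the highest peak) stays the same.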

Downloading clips.

This is perhaps the most straightforward task of clipping, since all it requires is piping (am I using this word correctly?) the output of a yt-dlp command into an ffmpeg command that trims it. To make things slightly more convenient, I wrote a script (download.py) that automates the command construction and provides CLI and GUI input methods (Figure 3). Input is logged, which allows source monitoring and is extremely useful for writing video captions. Most recently, I updated the script to automatically load the inputs from the last downloaded video.
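As an illustration of the kind of command being constructed, here is a small sketch. It is not taken from download.py: the function name `build_clip_command` and its arguments are hypothetical, and it only builds the command string rather than running it.

```python
import shlex


def build_clip_command(url, start, end, output):
    """Build a shell pipeline: yt-dlp streams the video to stdout, ffmpeg trims it.

    Hypothetical helper; `start` and `end` are timestamps such as "00:10:00".
    """
    ytdlp = ["yt-dlp", "-o", "-", url]  # "-o -" writes the download to stdout
    ffmpeg = [
        "ffmpeg",
        "-i", "pipe:0",            # read the video from stdin
        "-ss", start, "-to", end,  # keep only the part between start and end
        output,
    ]
    # shlex.join quotes arguments (e.g. URLs containing "?") for the shell.
    return f"{shlex.join(ytdlp)} | {shlex.join(ffmpeg)}"


print(build_clip_command("https://example.com/watch?v=abc", "00:10:00", "00:12:30", "clip.mp4"))
```

One drawback of this simple form is that yt-dlp still has to stream everything up to the end timestamp. yt-dlp also has a `--download-sections` option that may avoid this, though I have not compared the two approaches here.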

Video editing.

At first, I didn’t do any editing. Eventually, I picked up After Effects and Premiere Pro, but ditched the former after one video due to the steep learning curve. Then, for about 70 videos, I used Premiere Pro exclusively.

However, most recently, I began developing several tools to automate common editing tasks: removing intervals of silence, cross-fading video and audio, reading and writing subtitle files, etc. These scripts are located in haganenoneko/ClippingTools (github.com). The most notable/useful ones are:

[remove_silence.jl], which removes intervals of silence from a video. It takes various input arguments, including a minimum silence duration, a maximum silence volume, and a minimum non-silence duration. For example, if given a 100ms minimum silence duration, the script will ignore any intervals of silence shorter than 100ms. Clearly, excising minuscule intervals can severely interrupt the flow of conversation and create a disruptive viewing experience. A similar result occurs if very short non-silent intervals are included in a video. This is probably the script I use the most at the moment. In the future, I’d like to add a bit of padding between concatenated non-silent intervals.

remove_silence.jl is written in Julia rather than Python (unlike the other scripts I’ve written) because I didn’t trust Python to efficiently handle the amount of audio data in longer videos. However, Julia doesn’t have a good audio input-output package, so I ended up calling audiofile, a Python package, with PyCall.jl. I haven’t actually tested whether I can get good performance using NumPy to process the audio data.
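For reference, the interplay of the three parameters can be sketched in a few lines of NumPy. This is not a port of remove_silence.jl: the function name `keep_intervals`, the argument names, and the per-sample amplitude threshold are simplifying assumptions of mine (a windowed volume measure is probably more robust on real audio).

```python
import numpy as np


def keep_intervals(samples, sr, max_silence_volume, min_silence_s, min_sound_s):
    """Return [(start_s, end_s), ...] for the parts of the audio to keep.

    Hypothetical helper: `samples` is a 1-D array of amplitudes sampled at `sr` Hz.
    """
    samples = np.asarray(samples)
    if samples.size == 0:
        return []
    loud = np.abs(samples) > max_silence_volume
    # Indices where the signal switches between loud and silent.
    edges = np.flatnonzero(loud[1:] != loud[:-1]) + 1
    bounds = np.concatenate(([0], edges, [loud.size]))
    kept = []
    for a, b in zip(bounds[:-1], bounds[1:]):
        a, b = int(a), int(b)
        if not loud[a] and (b - a) / sr >= min_silence_s:
            continue  # a long enough silence: cut it out
        # Loud run, or a silence too short to cut: keep it,
        # merging with the previous kept interval when they touch.
        if kept and kept[-1][1] == a:
            kept[-1][1] = b
        else:
            kept.append([a, b])
    # Finally, drop kept intervals that are too short to be worth including.
    return [(a / sr, b / sr) for a, b in kept if (b - a) / sr >= min_sound_s]
```

With a 100ms minimum silence duration, a 50ms pause between two words stays in the video, while a 300ms pause is removed, along with any isolated blip shorter than the minimum non-silence duration.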

overlay.py is a script that scales and overlays images in different arrangements on top of a video. This is a routine editing procedure in the VTuber clipping field to visually indicate the identity of speakers (e.g. Ichinose and Kanae bicker for 10 minutes. [Nijisanji/VSPO] ENG SUB - YouTube).

crossfade.py does what the name says, and crossfades a sequence of video clips.
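One way to crossfade a sequence of clips with ffmpeg is to chain its xfade (video) and acrossfade (audio) filters. The sketch below only builds the filter graph string; it is an assumption about how such a script could work, not the contents of crossfade.py, and the function name `xfade_filtergraph` is made up. The main subtlety is the offset of each fade, which depends on the durations of all earlier clips minus the overlaps already consumed.

```python
def xfade_filtergraph(durations, fade):
    """Build an ffmpeg filter_complex string that crossfades N inputs in order.

    Hypothetical helper: `durations` are clip lengths in seconds, `fade` is the
    crossfade length in seconds. Returns (graph, video_label, audio_label).
    """
    if len(durations) < 2:
        raise ValueError("need at least two clips to crossfade")
    parts = []
    v_prev, a_prev = "[0:v]", "[0:a]"
    elapsed = durations[0]  # length of the output built so far
    for i in range(1, len(durations)):
        offset = elapsed - fade  # the fade starts `fade` seconds before the end
        v_out, a_out = f"[v{i}]", f"[a{i}]"
        parts.append(
            f"{v_prev}[{i}:v]xfade=transition=fade:duration={fade}:offset={offset}{v_out}"
        )
        parts.append(f"{a_prev}[{i}:a]acrossfade=d={fade}{a_out}")
        v_prev, a_prev = v_out, a_out
        elapsed = offset + durations[i]  # each overlap shortens the total by `fade`
    return ";".join(parts), v_prev, a_prev
```

The returned labels would then be passed to `-map` in the ffmpeg call. Note that xfade generally expects the inputs to share resolution and frame rate, so clips from different sources may need to be rescaled first.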

There are a couple of other scripts, but these are the ones I use most often. Others can be found here: ClippingTools/MainClippingTools at main · haganenoneko/ClippingTools (github.com)

Translation.

Translation is done entirely in Aegisub, which can do a surprising number of tasks despite being an old piece of software. There are a couple neat tricks I like to use to make translation easier, e.g.

- Seeking through the video and using “Split video at current time frame” to determine timecodes on-the-fly, rather than setting them up separately. This does not work well when translating videos with multiple speakers, though.

- I use prefixes such as “C: “ and “A: “ to indicate lines that correspond to different speakers. I then select all such lines using a simple regex pattern, e.g. ^C\:\s, and then apply the desired style (i.e. colour, font family, font size, etc.)
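The prefix trick can also be applied outside of Aegisub. The sketch below is only an illustration, not part of my workflow: it assumes the standard ASS event layout (Layer, Start, End, Style, Name, MarginL, MarginR, MarginV, Effect, Text), and the function name `restyle` and the style names are hypothetical.

```python
import re


def restyle(dialogue_line, prefix_styles):
    """Set the Style field of an ASS Dialogue line based on its speaker prefix.

    Hypothetical helper: `prefix_styles` maps a prefix such as "C" to a style name.
    Lines that are not Dialogue events, or have no known prefix, are returned unchanged.
    """
    head, sep, body = dialogue_line.partition(": ")
    if head != "Dialogue" or not sep:
        return dialogue_line
    # The Text field is last and may itself contain commas, so split at most 9 times.
    fields = body.split(",", 9)
    if len(fields) != 10:
        return dialogue_line
    for prefix, style in prefix_styles.items():
        # Same idea as the ^C\:\s pattern, built for an arbitrary prefix.
        if re.match(rf"^{re.escape(prefix)}:\s", fields[9]):
            fields[3] = style
            break
    return "Dialogue: " + ",".join(fields)
```

For example, a line whose text starts with "C: " gets the style mapped to "C", while its timing and text are left untouched.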

Once translated, subtitles are hardcoded (added to the video) through a script called burn.py. It again uses ffmpeg, and is extremely simple, so there isn’t much to mention about it.
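For completeness, hardcoding subtitles with ffmpeg typically comes down to its subtitles video filter. The sketch below is an assumption about what such a command could look like rather than a copy of burn.py; `build_burn_command` is a made-up name, and it assumes simple file names without characters that need escaping inside an ffmpeg filter argument.

```python
def build_burn_command(video, subtitles, output):
    """Build an ffmpeg argument list that renders an ASS file onto the video.

    Hypothetical helper; paths are assumed to be plain names like "subs.ass".
    """
    return [
        "ffmpeg",
        "-i", video,
        "-vf", f"subtitles={subtitles}",  # draw the subtitles onto each frame
        "-c:a", "copy",                   # audio is unchanged, so copy it as-is
        output,
    ]


print(" ".join(build_burn_command("clip.mp4", "clip.ass", "clip_subbed.mp4")))
```

The video has to be re-encoded because the frames themselves change, which is why only the audio stream is copied.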

However, once subtitles are hardcoded, I sometimes have sections of the video that I want to remove. Sometimes, these are silent intervals that weren’t detected because of incompatible inputs to remove_silence.jl, or they may be parts of the video that I simply don’t want to translate. In these cases, I run concat_video_from_ass.py, which, as the name suggests, removes any parts of the video that are not represented in the ASS file.
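The core of this idea is working out which time intervals are covered by at least one subtitle line. A minimal sketch of that step follows; it is not the code of concat_video_from_ass.py, the names `ass_time_to_seconds` and `subtitled_intervals` and the `max_gap` parameter are my own, and the actual cutting and concatenation with ffmpeg is omitted.

```python
import re

# ASS timestamps look like H:MM:SS.cc (centiseconds).
_TIME = re.compile(r"(\d+):(\d{2}):(\d{2})\.(\d{2})")


def ass_time_to_seconds(timestamp):
    """Convert an ASS timestamp such as "0:01:02.25" to seconds."""
    h, m, s, cs = map(int, _TIME.fullmatch(timestamp).groups())
    return h * 3600 + m * 60 + s + cs / 100


def subtitled_intervals(ass_text, max_gap=0.0):
    """Return merged (start, end) intervals, in seconds, covered by Dialogue lines.

    Hypothetical helper: intervals separated by at most `max_gap` seconds are joined.
    """
    spans = []
    for line in ass_text.splitlines():
        if not line.startswith("Dialogue:"):
            continue
        # Fields: Layer, Start, End, Style, ...; Text is last and may contain commas.
        fields = line.split(":", 1)[1].strip().split(",", 9)
        spans.append((ass_time_to_seconds(fields[1]), ass_time_to_seconds(fields[2])))
    spans.sort()
    merged = []
    for start, end in spans:
        if merged and start <= merged[-1][1] + max_gap:
            merged[-1][1] = max(merged[-1][1], end)  # overlapping or adjacent: extend
        else:
            merged.append([start, end])
    return [tuple(span) for span in merged]
```

Everything outside the returned intervals would then be dropped, e.g. by trimming each interval and concatenating the pieces.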

Quantitative insights into the VSPO clipping community.

I’ve mentioned a couple minor improvements and features I’d like to add, but I think that the overarching goal of this work is to democratize and streamline clipping as much as possible. This is because the English VTuber fanbase is, in theory, very large, yet heavily skewed towards two organizations, namely Hololive and Nijisanji. These are the two largest VTuber agencies to date by YouTube subscribers. In contrast, I focus my clipping activities on the third-largest agency, VSPO. Unfortunately, they remain largely obscure to the English fanbase and do not employ official translators.

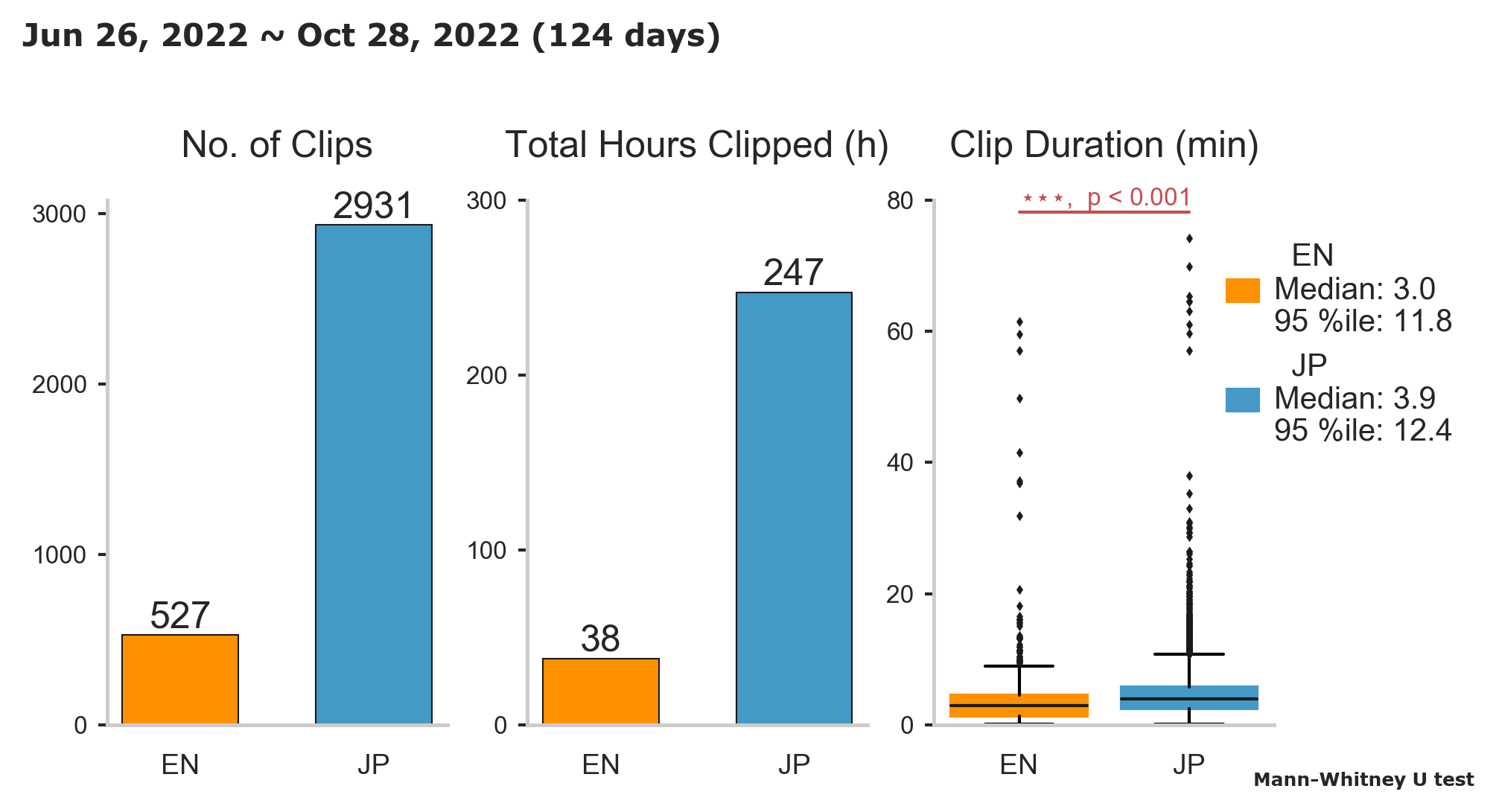

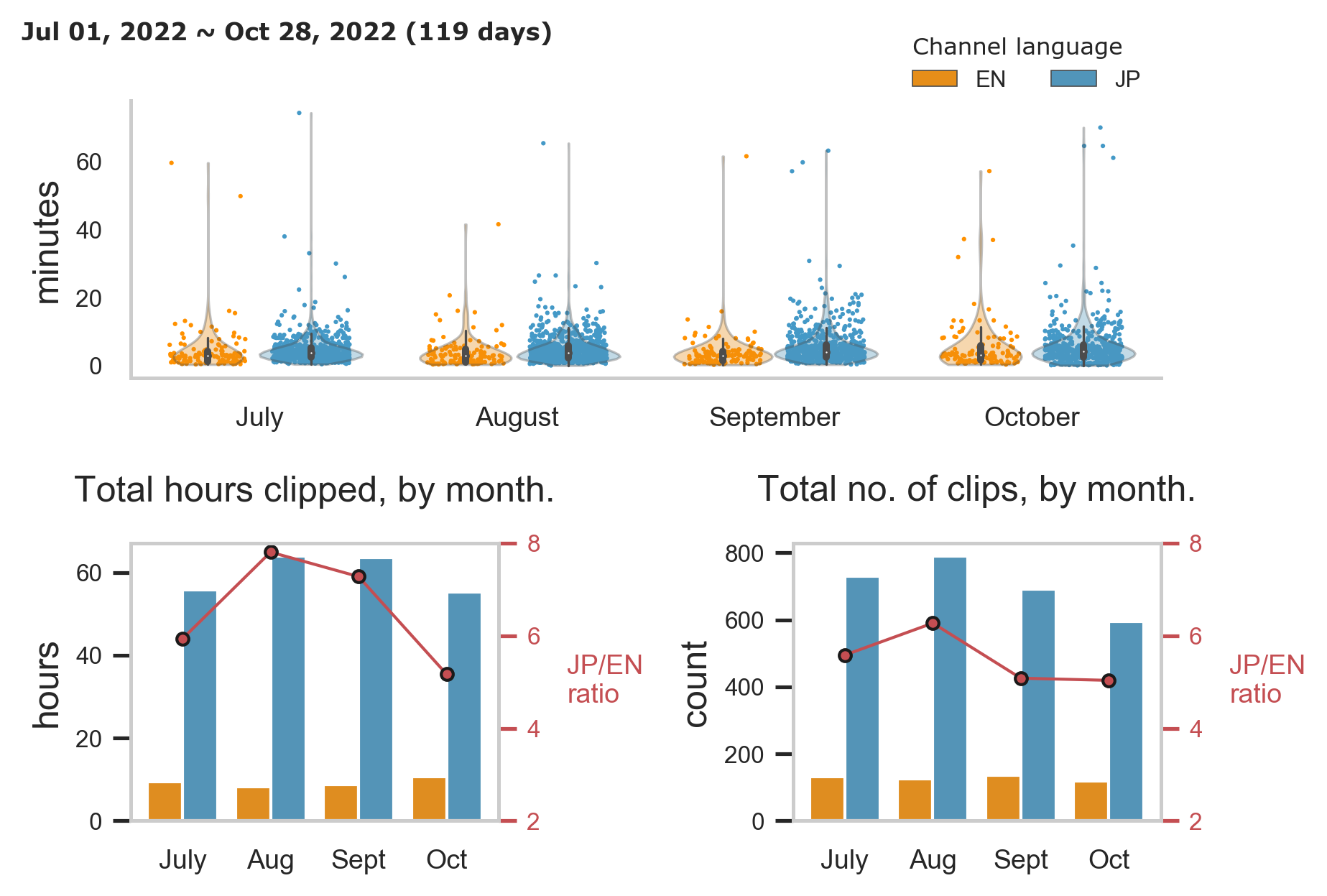

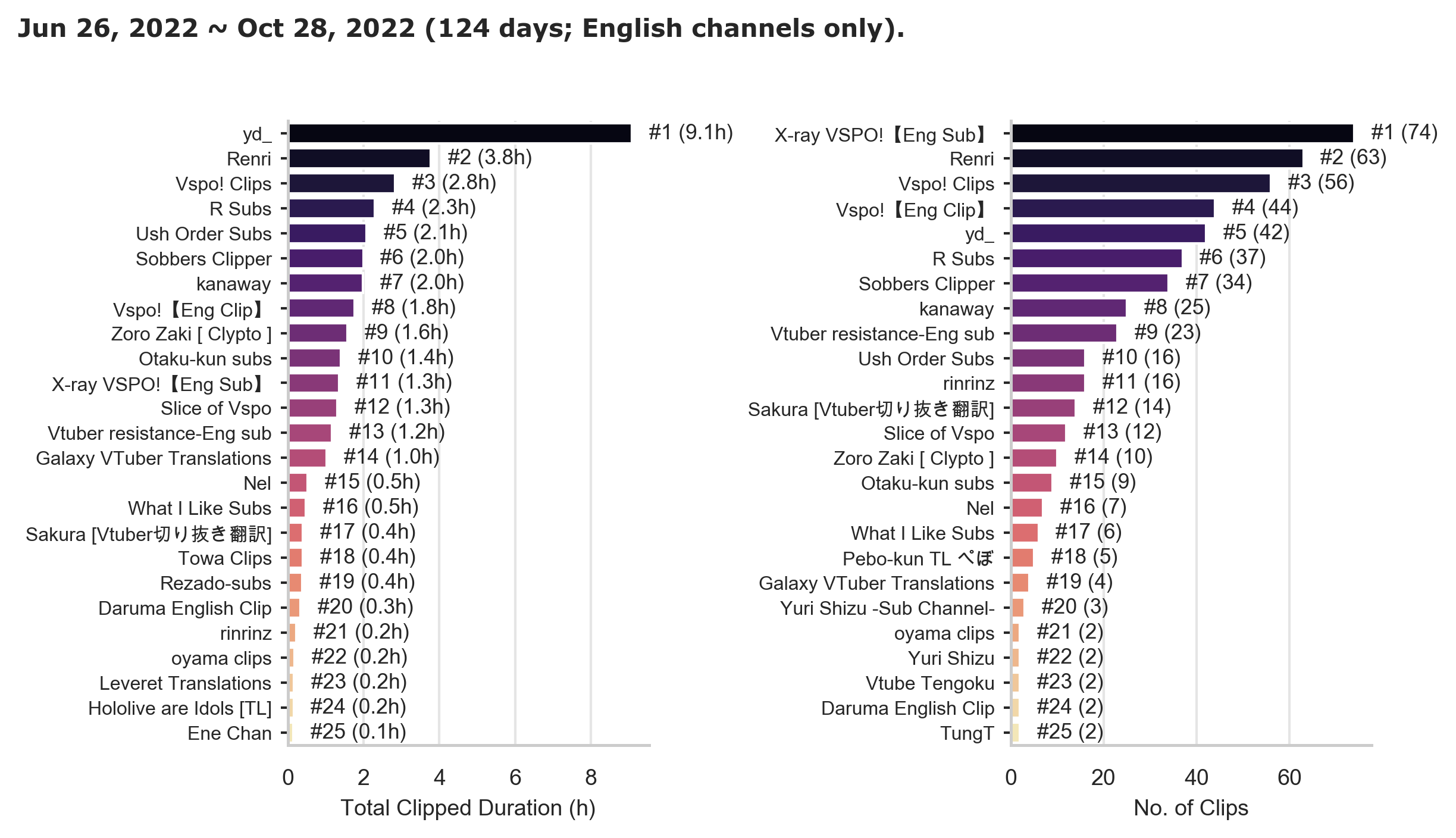

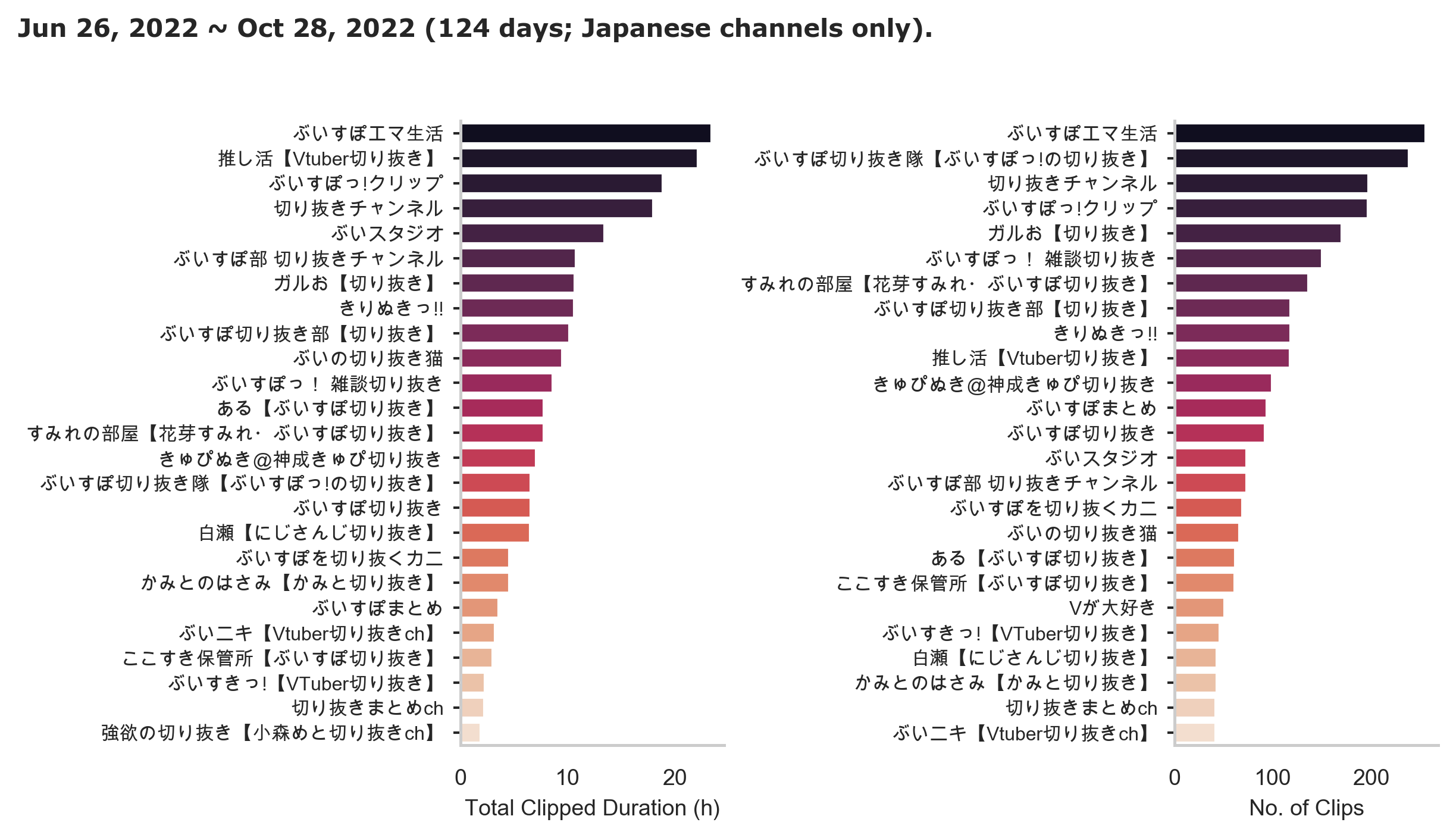

Moreover, the English (EN) clipping community produces far less content than the Japanese (JP) clipping channels (“clippers”). For instance, Japanese clippers produce about 6x more clips (by both total duration and number of videos; Figures 4 and 5).

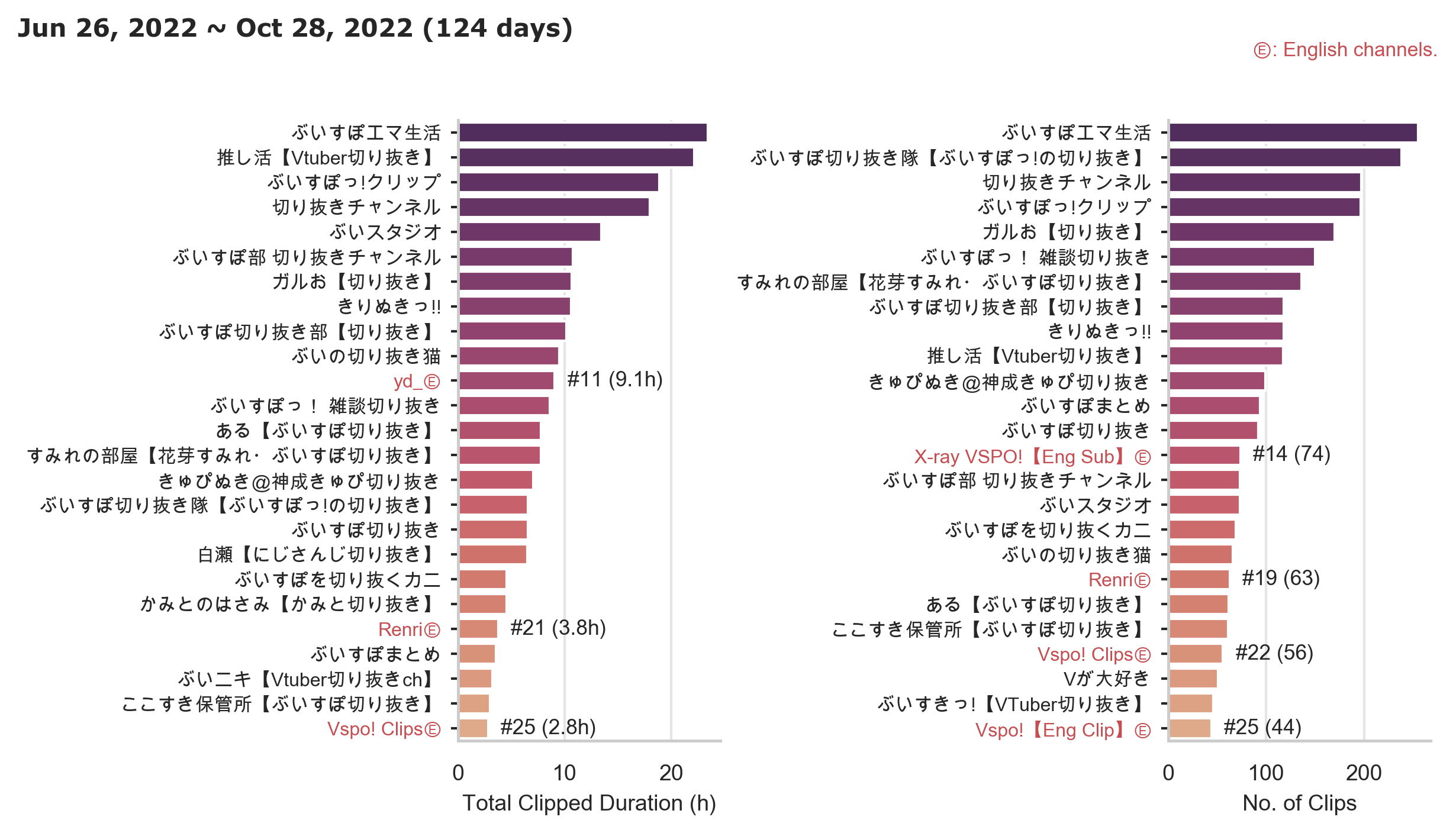

However, there are some promising observations. First, several English channels rank among the top producers of VSPO content, by number of videos, total video duration, or both (Figure 6). However, having ‘super uploaders’ alone is not enough, since, as seen in Figure 4, the distribution of upload duration is extremely skewed: most channels, whether EN or JP, don’t upload very much (Figures 7 and 8). By making it easier to edit and translate videos, we should be able to address this imbalance by simultaneously increasing the number of clippers and making it easier to produce long(er) videos.

Conclusion

I believe that a major boost to the community can come from democratizing the tools I’ve made and the insights I’ve learned from creating over 100 videos. Thus, the end-goal of this project will be to package them into some form of user-friendly application. While I’ve been interested in TypeScript for some time and contemplated learning Electron for this purpose, I think that the most feasible option will be to simply compile everything with pycompile, or otherwise provide a virtual environment and add a fully fleshed-out GUI, e.g. with PyQt5.

GLM studying.

I just wanted to write about what I’ve been doing recently. The work above was largely done several months back, and I’ve shared some of it in YouTube community posts on my channel, so it’s not quite representative of things that I’m actively working on. Of course, I’m unfortunately still a NEET, which explains why I can afford the time to work on these mundane questions and write a blogpost at 4:30 AM.

One of the ways I’ve always enjoyed staving off boredom and escaping reality has been to read and do math problems. For instance, after graduating, I took 6.431x and 18.651x - probability and statistics, respectively - through edX, and found these courses challenging and fun, although I didn’t quite understand the last few topics in both courses.

Specifically, I grew very interested in the topic of generalized linear models, or GLMs, because I wanted to understand how one could model categorical data with continuous functions and obtain continuous outputs (e.g. probabilities). I tried various resources, but, because I’m not particularly strong at math, I often found myself stuck and having to review linear algebra, multivariable calculus, basic statistics, etc.

Then, after about 4-5 months of not doing any serious statistics, I recently started reading and doing exercises in Dobson’s Introduction to GLMs, which I luckily had on hand. In fact, I had tried reading some sections before - if I recall, while studying GLMs in 18.651x - but the discussion was too advanced for me and I therefore dismissed the utility of the book as a learning resource. However, after starting from chapter 1, I found that the exercises are very reasonable - of course, they are very simple, but hard for me because I’m ‘bad at math’ - and the presentation of the material mostly intuitive; there are a few places where I wished the author hadn’t focused so much on GLMs and simply referred to general concepts as they are. Granted, I haven’t progressed much through the book, yet, so this may all come together in due time.

Anyhow, I’m taking a lot of notes again in Obsidian, keeping Julia notebooks for the exercises, and think that it’s definitely within the realm of possibility to actually read the book cover to cover within the next few months. An extremely useful resource is statisticsmatt - YouTube, a YouTube channel focused on advanced statistics, including GLMs and experimental design (which I hope to tackle after GLMs and GAMs).

I’m only on chapter 5 right now, so I haven’t yet seen anything like proportional odds, logit or probit regression, etc., but I skimmed the chapter and saw an extensive discussion of deviance and goodness of fit testing. These concepts always confused me, even when I was reading Agresti’s book on Categorical Data Analysis, so I’m really looking forward to finishing the chapter and trying out the exercises.

However, I’m having a hard time remembering basic statistics, e.g. consistency and bias of estimators, equations for various PDFs, etc., so it’s going to be a bit difficult because of that. Also, I’ve once again found that I’ve forgotten a lot of linear algebra. Well, that never changes, huh? I feel like I complain about this every year.

Besides GLMs, I’m also back to studying Japanese. I’d like to get to a level where JLPT N2 is within reach. I’m currently focusing on reading, and try to read a BBC article in Japanese every one or two days.

That’s it for now. Hopefully my formatting script formats this properly.